Experience

3 Years of creating the largest location-based VR Experiences

I worked at DIVR Labs as both a VFX artist and a game designer on all three of their experiences: Golem, Arachnoid, and Meet the Dinosaurs.

This taught me a lot about the unique challenges of creating effects for VR.

2 Years of crafting unique visuals for Extinction Protocol

(Maximum quality and full-screen viewing are recommended for the trailer, since all gameplay sequences are rendered in-engine.)

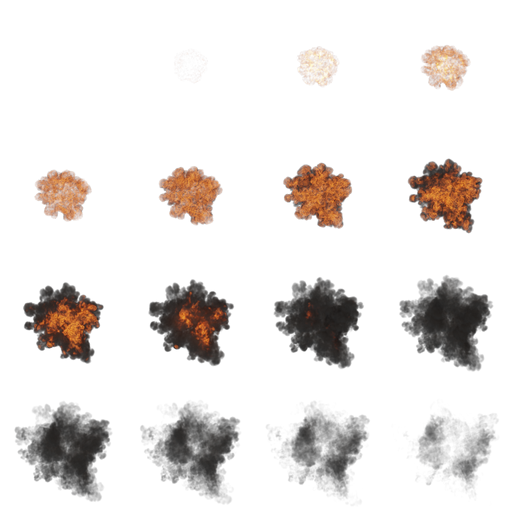

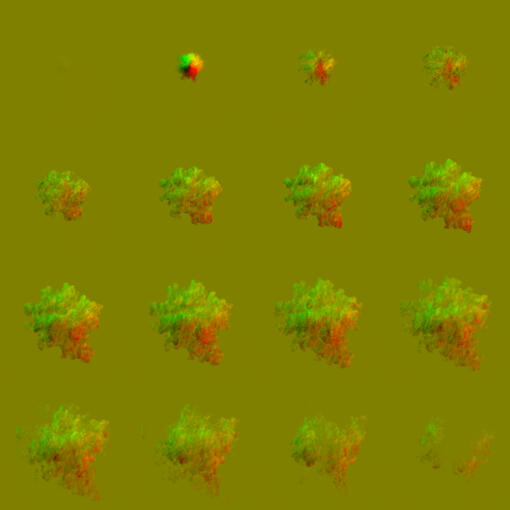

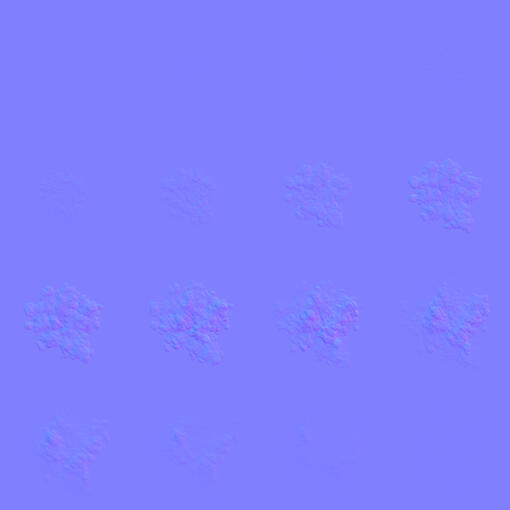

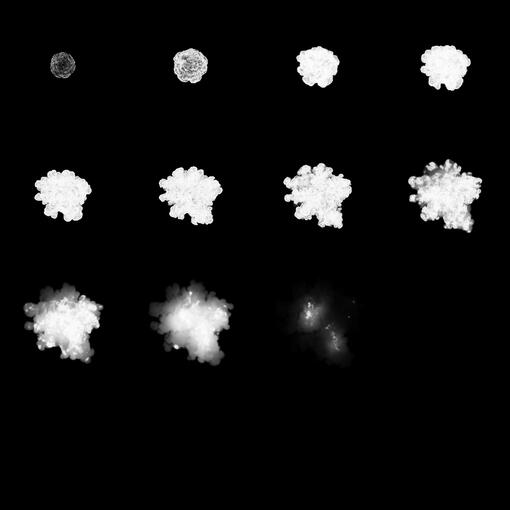

VR Effects

Side note: these effects are optimized for VR, sometimes even for specific headsets, so they may look slightly worse here.

Achievement Visualization

Over the course of the game, the players' main mission is to collect traces of an unknown dinosaur, and this visualization shows them all the stages they managed to discover.

Loading Projected Dinosaurs

For selfie shots, we materialize dinosaurs all around the players.

Time-travel portal

I wanted to give the players time to feel and admire the process of returning to their own time.

Backend

It's actually just one mesh (and two copies for "particles" close to the player) that's being manipulated at runtime.

Look-through portal

At first, we tried an opaque, Stargate-like portal, and while we liked its solid feel, in the end we decided to blend it with the view of the other side for game design reasons.

Scanning

It took a few iterations to get the right feeling of extracting information from objects over time and to match it with appropriate glove feedback.

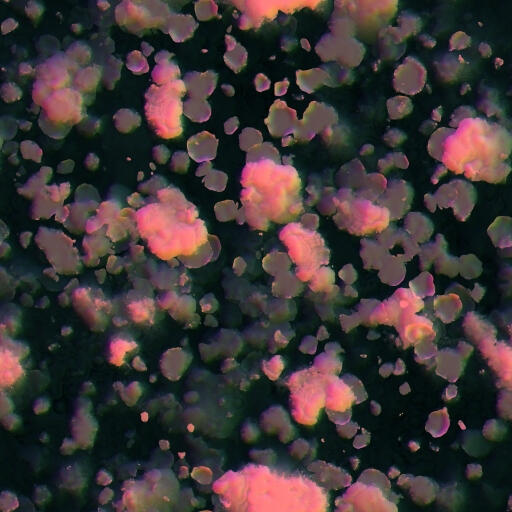

Interactive Mushroom

When touched, it contracts around the collision point and emits dirty, wet smoke that sticks to the player's suit.

It uses baked vertex data and a single RGBA texture for the camera overlay.

Flowmapped Skybox and Smoke

The shader turns any regular skybox into an animated one, moving it in a much more natural flow than simple panning. It's based on a GDC talk by Naughty Dog.

The volcano smoke uses a flowmap too, blended with a noise texture.
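A flowmap is usually applied with the common two-phase formulation: the texture is sampled twice with UVs pushed along the flow vector, the two phases run half a cycle apart, and a triangle-shaped weight hides the moment each phase snaps back. The following is a minimal sketch of that general technique, not the shader from the project; the names (`flowmap_uvs`, `sample_flowmapped`, `strength`) are illustrative, and the flow vector is assumed to be already decoded to roughly [-1, 1].

```python
import math
from typing import Callable, Tuple

UV = Tuple[float, float]


def frac(x: float) -> float:
    """Fractional part, like HLSL frac(): always in [0, 1)."""
    return x - math.floor(x)


def flowmap_uvs(uv: UV, flow: UV, time: float, strength: float = 0.1):
    """Return two flow-distorted UVs and their blend weights.

    The two phases are half a cycle apart, so while one phase resets
    (weight 0) the other is fully visible (weight 1).
    """
    phase0 = frac(time)
    phase1 = frac(time + 0.5)
    uv0 = (uv[0] - flow[0] * phase0 * strength, uv[1] - flow[1] * phase0 * strength)
    uv1 = (uv[0] - flow[0] * phase1 * strength, uv[1] - flow[1] * phase1 * strength)
    # Triangle wave: 0 when phase0 resets, 1 at mid-cycle.
    w0 = 1.0 - abs(2.0 * phase0 - 1.0)
    w1 = 1.0 - w0
    return uv0, uv1, w0, w1


def sample_flowmapped(texture: Callable[[UV], float], uv: UV, flow: UV, time: float) -> float:
    """Blend two samples of the same (here scalar, hypothetical) texture."""
    uv0, uv1, w0, w1 = flowmap_uvs(uv, flow, time)
    return texture(uv0) * w0 + texture(uv1) * w1
```

For the volcano smoke, the flowmapped result would additionally be blended with a noise texture, as described above.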

Three-dimensional Hologram

By using a customizable number of parallax layers "inside" the mesh, I was able to create a strong sense of volume instead of a mere surface effect, which doesn't look persuasive enough in VR.

It also compensates for camera distance to avoid aliasing artifacts.
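The parallax-layer idea can be sketched as follows: each virtual layer sits at some depth below the surface, and the view ray reaches it after travelling further the deeper it is, so deeper layers are sampled with larger UV offsets. This is a minimal sketch under assumptions: the view direction is given in tangent space pointing into the surface, the additive weighting in `composite_layers` is hypothetical, and the camera-distance compensation mentioned above is not modelled.

```python
from typing import Callable, List, Tuple

UV = Tuple[float, float]
Vec3 = Tuple[float, float, float]


def parallax_layer_uvs(uv: UV, view_dir: Vec3, layer_count: int, max_depth: float) -> List[UV]:
    """UVs for `layer_count` virtual layers placed below the surface.

    view_dir: tangent-space direction from the camera to the surface point,
    with z < 0 pointing into the surface. A layer at depth d is reached after
    travelling d / -z along the ray, which shifts its UV by view_dir.xy * d / -z.
    """
    vx, vy, vz = view_dir
    if vz >= 0.0:
        raise ValueError("view_dir must point into the surface (z < 0)")
    uvs = []
    for i in range(layer_count):
        depth = max_depth * (i + 1) / layer_count
        t = depth / -vz
        uvs.append((uv[0] + vx * t, uv[1] + vy * t))
    return uvs


def composite_layers(texture: Callable[[UV], float], uvs: List[UV]) -> float:
    """Additive blend that fades deeper layers (hypothetical weighting)."""
    n = len(uvs)
    return sum(texture(p) * (1.0 - i / n) for i, p in enumerate(uvs)) / n
```

Viewed head-on, all layers line up; at grazing angles they separate, which is what reads as depth.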

Lack of animator's time calls for creative solutions

I managed to animate several animals by blending between two mesh states in the vertex shader.

Control is everything

As you can see, different body parts move at different speeds, and to sell the motion better, the blend is combined with matching movement along selected axes.
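The two-state blend with per-part speeds can be expressed per vertex roughly like this. It is a sketch under assumptions, not the original shader: `part_speed` stands for a per-vertex value assumed to be baked (for example into vertex color), and `axis`/`axis_amount` are hypothetical names for the extra movement along a selected axis.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def animate_vertex(pos_a: Vec3, pos_b: Vec3, part_speed: float, axis: Vec3,
                   axis_amount: float, time: float) -> Vec3:
    """Blend one vertex between two mesh states, as a vertex shader might.

    part_speed: per-vertex speed multiplier, so body parts cycle at different rates.
    axis, axis_amount: extra offset along a chosen axis, driven by the same
        weight, to sell the motion beyond a plain two-pose blend.
    """
    # Smooth 0 -> 1 -> 0 weight over one cycle of this part.
    w = 0.5 - 0.5 * math.cos(2.0 * math.pi * part_speed * time)
    return tuple(
        a + (b - a) * w + ax * axis_amount * w
        for a, b, ax in zip(pos_a, pos_b, axis)
    )
```

Because only two poses are stored, the cost is one extra position per vertex, which is what makes this viable when animator time is scarce.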

Teleportation effect

Watching your friends (and, moments later, yourself) being teleported not all at once but bit by bit is a memorable experience.

Electric shock

In Arachnoid, the players get this stylized feedback when they interact with the puzzle incorrectly.

Radar Interface

It follows the world position of an object, and thanks to a seamless texture, it has no area limitation.
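Why a seamless texture removes the area limit can be shown in a few lines: the radar background UV is offset by the tracked object's world position and wrapped with `frac`, and since the texture tiles seamlessly the wrap is invisible. A minimal sketch; `tile_size` (world distance per texture repeat) and the function name are hypothetical.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def frac(x: float) -> float:
    """Fractional part, like HLSL frac(): always in [0, 1)."""
    return x - math.floor(x)


def radar_background_uv(local_uv: Vec2, tracked_world_xz: Vec2, tile_size: float) -> Vec2:
    """UV for a seamless radar texture that scrolls with a tracked object.

    local_uv: UV on the radar quad, in [0, 1]
    tracked_world_xz: world position of the followed object (horizontal plane)
    tile_size: world distance covered by one repeat of the texture
    """
    return (
        frac(local_uv[0] + tracked_world_xz[0] / tile_size),
        frac(local_uv[1] + tracked_world_xz[1] / tile_size),
    )
```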

Local Radial Blur

It blends between the mesh and three pre-blurred textures depending on speed.
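One way to turn speed into blend weights over the four sources (the sharp mesh plus three pre-blurred textures) is to map speed to a continuous "blur level" and split it between the two nearest sources. A sketch under assumptions: the normalisation by `max_speed` and the linear mapping are hypothetical.

```python
from typing import List


def radial_blur_weights(speed: float, max_speed: float) -> List[float]:
    """Weights for [sharp mesh, blur 1, blur 2, blur 3] at a given speed.

    Speed is mapped to a 0..3 blur level; only the two neighbouring
    sources get non-zero weight, so transitions between them are smooth.
    """
    level = min(max(speed / max_speed, 0.0), 1.0) * 3.0
    lower = min(int(level), 2)  # index of the lower of the two blended sources
    t = level - lower
    weights = [0.0, 0.0, 0.0, 0.0]
    weights[lower] = 1.0 - t
    weights[lower + 1] = t
    return weights
```

Pre-blurring the textures means the shader never loops over many taps at runtime; it only mixes at most two samples.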

Chaperone walls

Since ease of use for level designers was crucial, these are simple quads that adapt to any placement and size.

Magic light

In our first game, the players had to pick up a glowing magic substance that let them discover hidden signs.

Hand scanner feedback

A glass material that still needed to be clearly visible

Lava Tunnels

Glitchy monitors

2D Effects

Water System

Since there is a lot to reflect, we chose a screen-space approach. Using two render textures, it lets us place several "water spots" in the level, each with its own characteristics.

It supports foreground elements that are excluded from the reflection and even allows for floating objects that are themselves reflected.
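At its core, a 2D screen-space reflection is a mirrored lookup: a pixel below the water line samples the already rendered scene at the same distance above it, nudged by a wave offset. A minimal sketch assuming a horizontal water line and screen y growing downward; the two render textures, foreground exclusion, and floating objects from the description are not modelled here.

```python
from typing import Optional, Tuple

Vec2 = Tuple[float, float]


def reflection_source(pixel: Vec2, water_line_y: float,
                      wave_offset: Vec2 = (0.0, 0.0)) -> Optional[Vec2]:
    """Screen position to sample for a reflected pixel.

    Pixels below the water line sample the scene mirrored about that line,
    shifted by a wave offset (e.g. from a normal map). Pixels above the
    water line get no reflection (None).
    """
    x, y = pixel
    if y <= water_line_y:
        return None
    mirrored_y = 2.0 * water_line_y - y
    return (x + wave_offset[0], mirrored_y + wave_offset[1])
```

Each water spot would supply its own water line and wave settings, which is one way to give every spot its own characteristics.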

Also, since the textures for the effect are created in Substance Designer from the same base, the wave normals and ripples feel very consistent.

What's more, each texture has two variants (encoded in otherwise unused channels) that are blended together to make the water feel more dynamic.
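As an illustration of packing two variants into one texture: a unit normal only needs X and Y (Z can be reconstructed), so a single RGBA texel can hold two normal-map variants. The layout below (variant A in RG, variant B in BA) is a hypothetical assumption, not necessarily the project's channel layout.

```python
import math
from typing import Tuple

RGBA = Tuple[float, float, float, float]
Vec3 = Tuple[float, float, float]


def decode_xy(r: float, g: float) -> Tuple[float, float]:
    """Map stored [0, 1] values back to a [-1, 1] normal XY."""
    return r * 2.0 - 1.0, g * 2.0 - 1.0


def blended_wave_normal(texel: RGBA, time: float, speed: float = 1.0) -> Vec3:
    """Blend two normal-map variants packed in one texel, then rebuild Z."""
    ax, ay = decode_xy(texel[0], texel[1])
    bx, by = decode_xy(texel[2], texel[3])
    w = 0.5 + 0.5 * math.sin(time * speed)  # slow back-and-forth blend
    x = ax + (bx - ax) * w
    y = ay + (by - ay) * w
    z = math.sqrt(max(0.0, 1.0 - x * x - y * y))
    length = math.sqrt(x * x + y * y + z * z)
    return (x / length, y / length, z / length)
```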

Recently, I updated the water spots with an option to include customizable rain ripples.

Sky and Fog

To reduce overdraw and particle counts, the sky and the fog are each just a single quad using only two textures, cleverly blended several times at various sizes, rotations, etc.
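Reusing one texture several times with different transforms can be sketched like this: each layer rotates and scales the quad's UVs around the centre, scrolls them over time, and the samples are mixed with per-layer weights. A sketch under assumptions: the `Layer` fields and the weighted average are illustrative, and in practice this would run once per texture (the description mentions two).

```python
import math
from dataclasses import dataclass
from typing import Callable, List, Tuple

UV = Tuple[float, float]


@dataclass
class Layer:
    scale: float     # tiling factor
    rotation: float  # radians, around the quad centre
    scroll: UV       # UV units per second
    weight: float    # contribution to the final mix


def layer_uv(uv: UV, layer: Layer, time: float) -> UV:
    """Rotate and scale around the quad centre, then scroll over time."""
    cx, cy = uv[0] - 0.5, uv[1] - 0.5
    c, s = math.cos(layer.rotation), math.sin(layer.rotation)
    rx, ry = cx * c - cy * s, cx * s + cy * c
    return (rx * layer.scale + 0.5 + layer.scroll[0] * time,
            ry * layer.scale + 0.5 + layer.scroll[1] * time)


def layered_sample(texture: Callable[[UV], float], uv: UV, layers: List[Layer], time: float) -> float:
    """Weighted average of several differently transformed samples of one texture."""
    total = sum(l.weight for l in layers)
    return sum(texture(layer_uv(uv, l, time)) * l.weight for l in layers) / total
```

The point of the design is that all of this happens in one pixel shader pass over one quad, instead of many overlapping transparent particles.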

All the objects in the background use the same material, which gives them the correct color.

And since the weather changes, both the sky and the background material use a manager to blend between predefined states.
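A weather manager that blends between predefined states can be as simple as interpolating every parameter of the current state toward a target over a duration. A minimal sketch; the fields of `WeatherState` are hypothetical placeholders, since the actual parameter set isn't described.

```python
from dataclasses import dataclass
from typing import Tuple

Color = Tuple[float, float, float]


@dataclass(frozen=True)
class WeatherState:
    sky_tint: Color      # hypothetical parameters
    fog_density: float
    cloud_speed: float


def _lerp(a: float, b: float, t: float) -> float:
    return a + (b - a) * t


def blend_states(a: WeatherState, b: WeatherState, t: float) -> WeatherState:
    t = min(max(t, 0.0), 1.0)
    return WeatherState(
        sky_tint=tuple(_lerp(x, y, t) for x, y in zip(a.sky_tint, b.sky_tint)),
        fog_density=_lerp(a.fog_density, b.fog_density, t),
        cloud_speed=_lerp(a.cloud_speed, b.cloud_speed, t),
    )


class WeatherManager:
    """Blends from the current state to a target over a given duration.

    The returned state would be pushed each frame to both the sky and the
    shared background material, so they always change together.
    """

    def __init__(self, initial: WeatherState):
        self.current = initial
        self._from = initial
        self._to = initial
        self._duration = 0.0
        self._elapsed = 0.0

    def transition_to(self, target: WeatherState, duration: float) -> None:
        self._from = self.current
        self._to = target
        self._duration = duration
        self._elapsed = 0.0

    def update(self, dt: float) -> WeatherState:
        if self._duration <= 0.0:
            self.current = self._to
        else:
            self._elapsed = min(self._elapsed + dt, self._duration)
            self.current = blend_states(self._from, self._to, self._elapsed / self._duration)
        return self.current
```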

Background Lights

To break up the homogeneity of the background, custom lights are stored as vector arrays and, besides the usual properties like range and color, can have custom aspect ratios and be aimed toward the player as needed.
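An aspect ratio and an aim direction can both be handled by rotating the pixel offset into the light's local frame and stretching one axis before computing falloff. A sketch under assumptions: the quadratic falloff and the function names are hypothetical; in a shader, each light's values would be packed into entries of the vector arrays mentioned above.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]


def aim_angle(light_pos: Vec2, player_pos: Vec2) -> float:
    """Angle (radians) that points the light's long axis at the player."""
    return math.atan2(player_pos[1] - light_pos[1], player_pos[0] - light_pos[0])


def light_intensity(pixel: Vec2, light_pos: Vec2, light_range: float,
                    aspect: float, angle: float) -> float:
    """Elliptical falloff for one background light.

    aspect > 1 stretches the light along its local x axis; angle rotates
    that axis (e.g. the value from aim_angle).
    """
    dx, dy = pixel[0] - light_pos[0], pixel[1] - light_pos[1]
    # Rotate the offset into the light's local frame.
    c, s = math.cos(-angle), math.sin(-angle)
    lx, ly = dx * c - dy * s, dx * s + dy * c
    d = math.hypot(lx / aspect, ly) / light_range
    return max(0.0, 1.0 - d) ** 2
```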

Custom Light System

We couldn't use the built-in light system, because in the forward rendering pipeline every additional light adds rendering work for each object it affects, so the cost climbs steeply as lights are added.

Instead, we render light direction and color into two render textures, and then we're free to process them however we need.
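Once direction and color live in two render textures, lighting a pixel becomes a couple of texture reads and a dot product, independent of how many lights contributed. A minimal Lambert sketch under assumptions: the direction texture is assumed to store a unit vector remapped from [-1, 1] to [0, 1], and both textures are assumed to be screen-aligned with the shaded sprite; the real encoding and processing may differ.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def decode_direction(texel: Vec3) -> Vec3:
    """Unpack a light direction stored as a [0, 1] color back to a unit vector."""
    x, y, z = (c * 2.0 - 1.0 for c in texel)
    length = math.sqrt(x * x + y * y + z * z) or 1.0
    return (x / length, y / length, z / length)


def shade_pixel(albedo: Vec3, normal: Vec3, direction_texel: Vec3, light_color_texel: Vec3) -> Vec3:
    """Lambert diffuse from the two light buffers (direction and color)."""
    l = decode_direction(direction_texel)
    n_dot_l = max(0.0, sum(n * d for n, d in zip(normal, l)))
    return tuple(a * c * n_dot_l for a, c in zip(albedo, light_color_texel))
```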

Energy shield

Laser beam

Hologram

Interactive Glitch

EMP Storm incoming

Units stunned by EMP

Pulse System

It doesn't have a proper use case at the moment.

(Note that the artifacts are only present in this preview.)

Since we use lots of emissive elements that behave differently, we needed a system to merge them all into, ideally, a single sprite with a single pulse texture.

Unlike other solutions, this allows the same spot on the texture to emit repeatedly during a single loop.
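How the original pulse texture is encoded isn't described, so the following is only one hypothetical way a single texel could fire several times per loop: store a phase offset, a pulse count, and a pulse width in separate channels, and multiply the loop phase by the count. Treat the channel layout and `max_pulses` as assumptions.

```python
import math
from typing import Tuple


def frac(x: float) -> float:
    """Fractional part, like HLSL frac(): always in [0, 1)."""
    return x - math.floor(x)


def pulse_intensity(texel: Tuple[float, float, float], loop_time: float, max_pulses: int = 8) -> float:
    """Emission intensity of one texel of a hypothetical pulse texture.

    Assumed channels: R = phase offset in the loop, G = pulses per loop
    divided by max_pulses, B = pulse width as a fraction of each sub-cycle.
    loop_time is the normalised time within the loop, in [0, 1).
    """
    offset, count_norm, width = texel
    count = max(1, round(count_norm * max_pulses))
    # Multiplying by count repeats the sub-cycle, so one texel fires `count` times.
    phase = frac(loop_time * count + offset)
    if width <= 0.0 or phase >= width:
        return 0.0
    return math.sin(math.pi * phase / width)  # smooth 0 -> 1 -> 0 bump
```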

Hopply

As a small freelance job, I implemented water reflections (using sprite duplication, which is cheaper on mobile) and underwater/swimming visuals on both the shader and the C# side.

All displacement is done in the vertex shader, using grid meshes for the sprites.
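Grid meshes matter because vertex displacement can only bend a sprite where it has vertices; a plain four-vertex quad can't wave at all. A minimal sketch of a grid plus a sideways sine wobble, the kind of per-vertex offset an underwater effect might apply; the function names and the specific wave are illustrative assumptions.

```python
import math
from typing import List, Tuple

Vec2 = Tuple[float, float]


def make_grid(width: float, height: float, cols: int, rows: int) -> List[Vec2]:
    """Vertices of a sprite-sized grid mesh with cols x rows cells."""
    return [
        (width * c / cols, height * r / rows)
        for r in range(rows + 1)
        for c in range(cols + 1)
    ]


def underwater_wobble(vertices: List[Vec2], time: float, amplitude: float,
                      wavelength: float, speed: float) -> List[Vec2]:
    """Sideways sine displacement, applied per vertex as a vertex shader would."""
    return [
        (x + amplitude * math.sin(2.0 * math.pi * y / wavelength + time * speed), y)
        for x, y in vertices
    ]
```

The grid resolution sets how smooth the wave looks, so it's a direct trade-off between quality and vertex count on mobile.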

Other

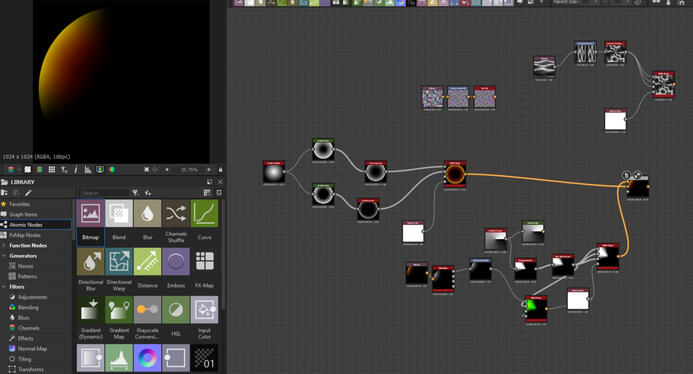

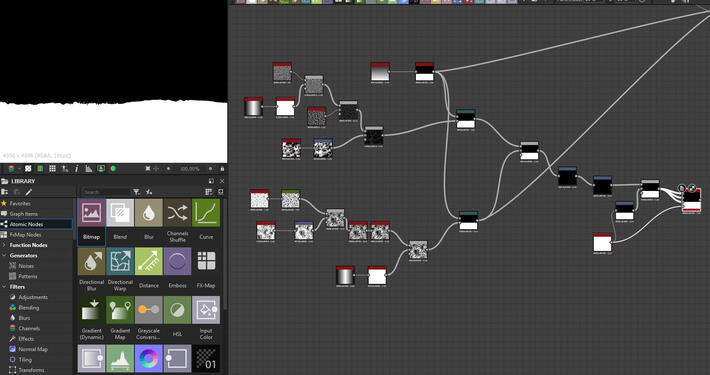

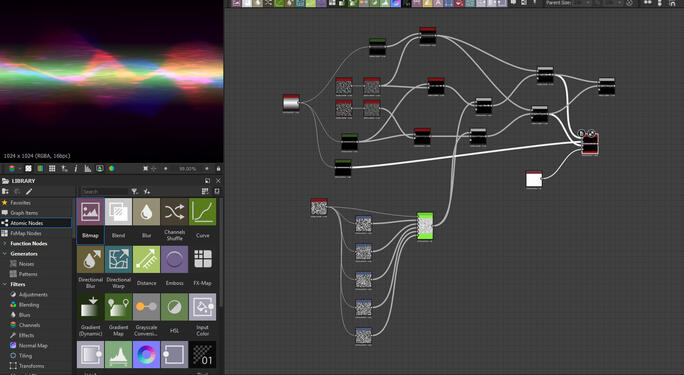

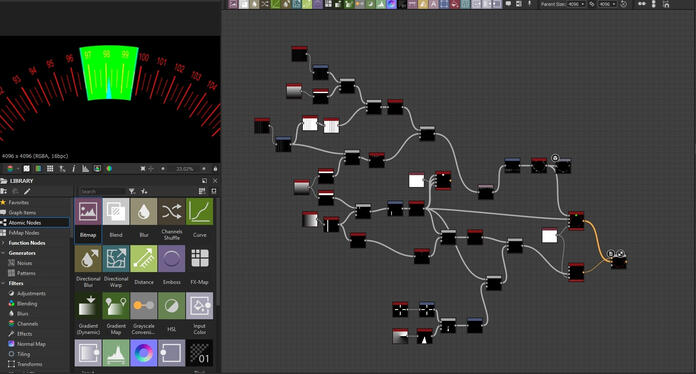

Substance Designer: my tool of choice for procedural textures and operations

Photoshop is wonderful software, but whenever I need seamless textures, advanced noise, lots of variation produced quickly, or technical precision, Substance Designer is a much better solution. It's also a real time-saver when iterating on complex textures.

Scripts & Tools

Not everything I did can be shown off in a flashy way, so I'll at least tell you about it:

A mesh baker that saves each part's origin to vertex color, so the many parts of a single mesh can still be interacted with individually

A mesh baker that saves the difference between two models to vertex color

A simple tool that allows sprite shape animation

Many particle/animation-related scripts & managers

Lost in Time

Since not all work ends up in the final product, I don't have visual material for these, but I had a lot of fun implementing them and was proud of the results.

Simple decals and projectors

Depth fog shaders

Swamp footsteps solution

Environment dirt accumulation shader

Toon shader

And that's about it

If you'd like to talk more or check out my LinkedIn, let me save you a few precious seconds.